Define the path structure for your files in the S3 bucket and click Next. Your role policy should reflect the read-only or read and write access you selected. You have now successfully configured your S3 bucket with Atlas Data Federation.

Connect to your MongoDB instance using the MongoDB shell: `mongosh "mongodb+srv://" --username username`. This command prompts you to enter the password.

Specify the database and collection that you want to export data from, replacing `db_name` and `collection_name` with actual values, and verify that the data exists.

Use the `$out` operator to export the data to an S3 bucket. Make sure to replace the placeholder values with the appropriate values for your S3 bucket and desired destination folder. Note: The `$out` operator will overwrite any existing data in the specified S3 location, so use a unique destination folder or bucket to avoid unintended data loss.

Real-time data synchronization needs to happen immediately following the one-time load process. This can be achieved in multiple ways.
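To make the `$out` export step above more concrete, here is a minimal sketch of how the stage document could be assembled. The helper names (`unique_prefix`, `build_s3_out_pipeline`), the bucket, region, and folder values, and the choice of Parquet with a 10GB file-size cap are all illustrative assumptions and do not come from the original post. The nested `s3` fields follow the shape that Atlas Data Federation documents for `$out`, but check the current documentation before relying on them.

```python
import json


def unique_prefix(base_folder, run_id):
    """Build a per-run destination prefix so one export never overwrites another.

    Both arguments are hypothetical values chosen by the caller.
    """
    return f"{base_folder.strip('/')}/{run_id.strip('/')}/"


def build_s3_out_pipeline(bucket, region, filename,
                          file_format="parquet", max_file_size="10GB"):
    """Return an aggregation pipeline whose only stage writes to S3 via $out."""
    if not bucket or not region or not filename:
        raise ValueError("bucket, region and filename are all required")
    out_stage = {
        "$out": {
            "s3": {
                "bucket": bucket,      # assumed: name of your S3 bucket
                "region": region,      # assumed: AWS region of that bucket
                "filename": filename,  # destination folder/prefix inside the bucket
                "format": {"name": file_format, "maxFileSize": max_file_size},
            }
        }
    }
    return [out_stage]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    pipeline = build_s3_out_pipeline(
        "my-export-bucket", "us-east-1", unique_prefix("exports", "run-001")
    )
    print(json.dumps(pipeline, indent=2))
```

The printed JSON could be pasted into `db.collection_name.aggregate(...)` in the MongoDB shell, or the list passed to a driver's `aggregate` call, while connected to the federated database instance. Using a fresh prefix per run is one way to respect the overwrite warning above.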

Choose Read and write to be able to write documents to your S3 bucket. Then assign an access policy to your AWS IAM role by following the steps in the Atlas user interface.
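As a rough illustration of what such an access policy might contain, the sketch below builds an IAM policy document for a single bucket. The action lists are an assumption based on common S3 read and write permissions, not the exact policy that Atlas generates, and `build_bucket_policy` and the bucket name are hypothetical. Treat the policy shown in the Atlas user interface as authoritative.

```python
import json

# Assumed permission sets; the policy Atlas displays may differ.
READ_ACTIONS = [
    "s3:ListBucket",
    "s3:GetObject",
    "s3:GetObjectVersion",
    "s3:GetBucketLocation",
]
WRITE_ACTIONS = ["s3:PutObject", "s3:DeleteObject"]


def build_bucket_policy(bucket, read_write=True):
    """Return an IAM policy document scoped to one bucket and its objects."""
    actions = READ_ACTIONS + (WRITE_ACTIONS if read_write else [])
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": actions,
                "Resource": [
                    f"arn:aws:s3:::{bucket}",    # the bucket itself (listing, location)
                    f"arn:aws:s3:::{bucket}/*",  # every object in the bucket
                ],
            }
        ],
    }


if __name__ == "__main__":
    # "my-export-bucket" is a placeholder name.
    print(json.dumps(build_bucket_policy("my-export-bucket"), indent=2))
```

Passing `read_write=False` yields the read-only variant, which would not be enough for `$out` to write documents to the bucket.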


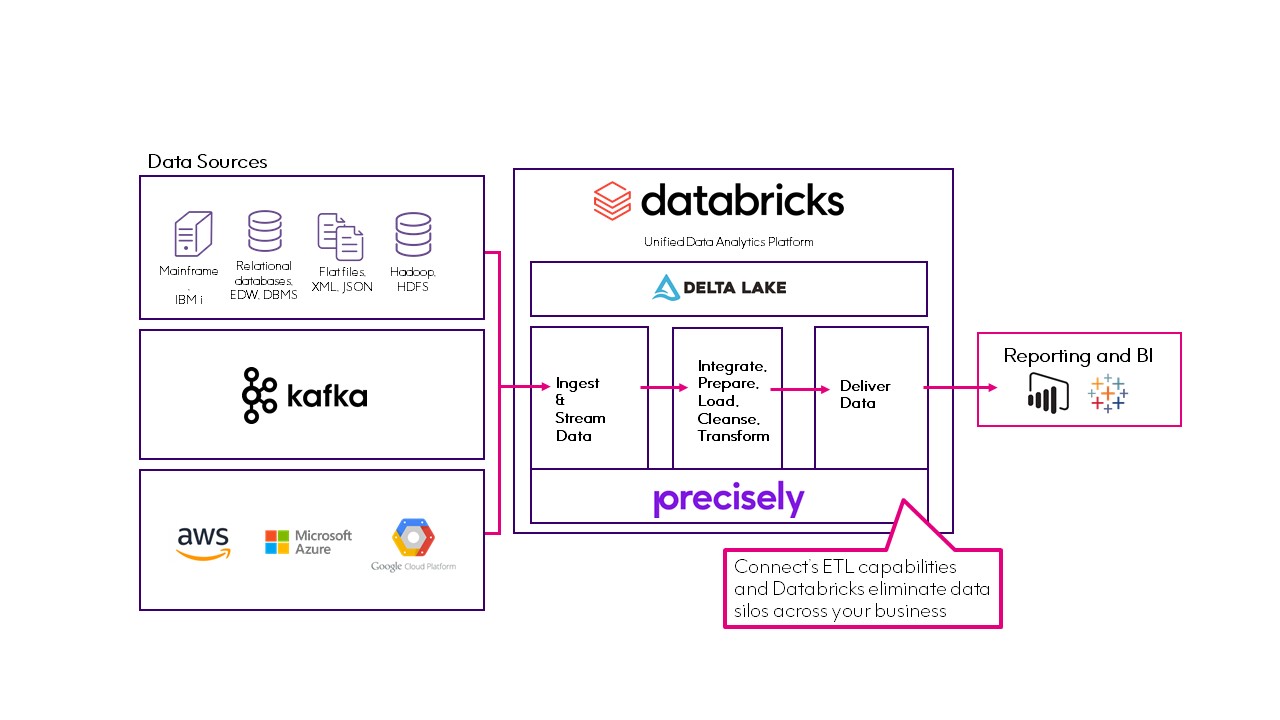

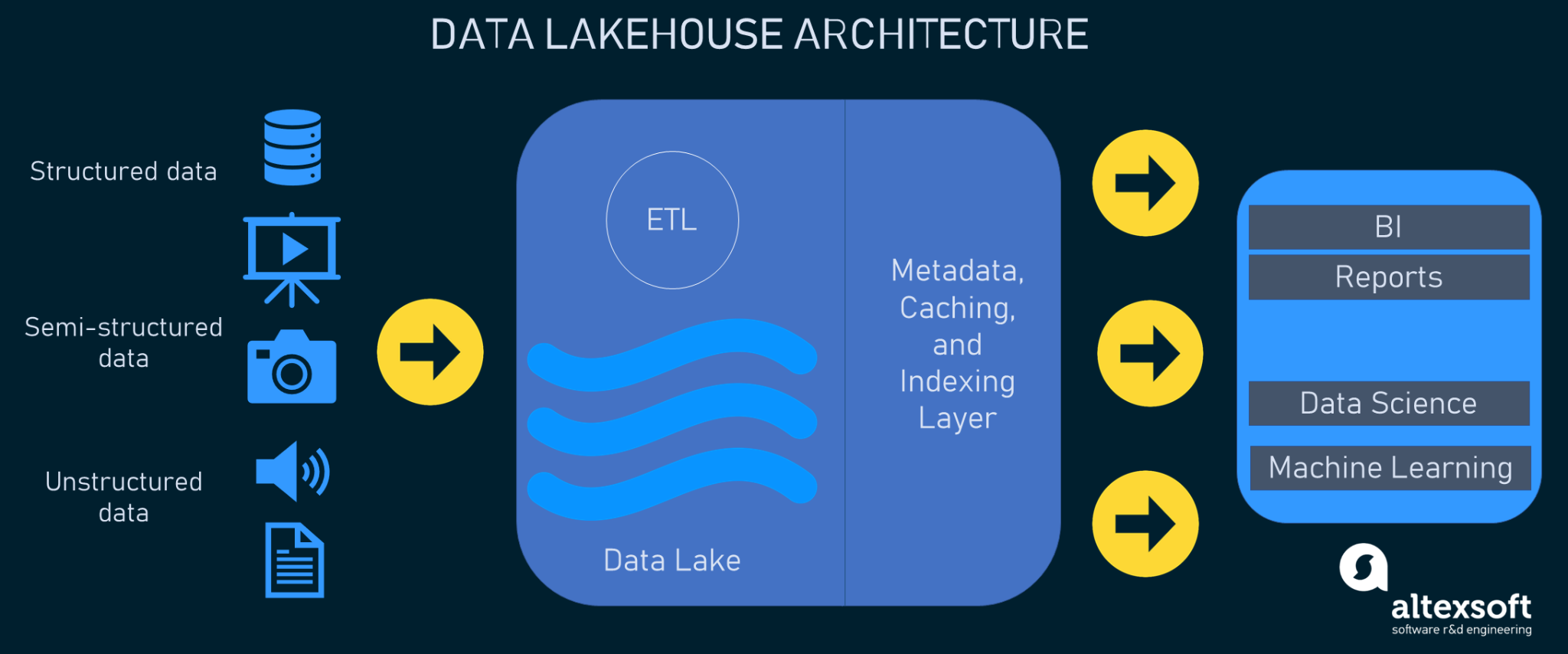

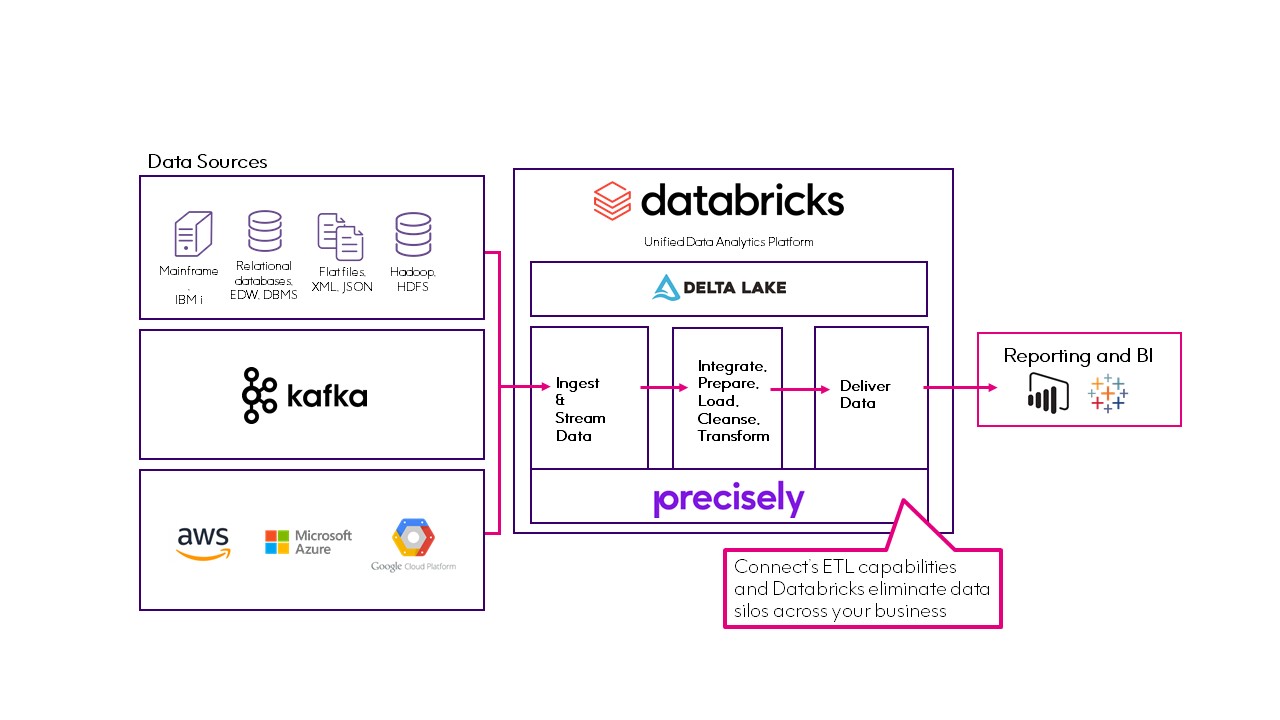

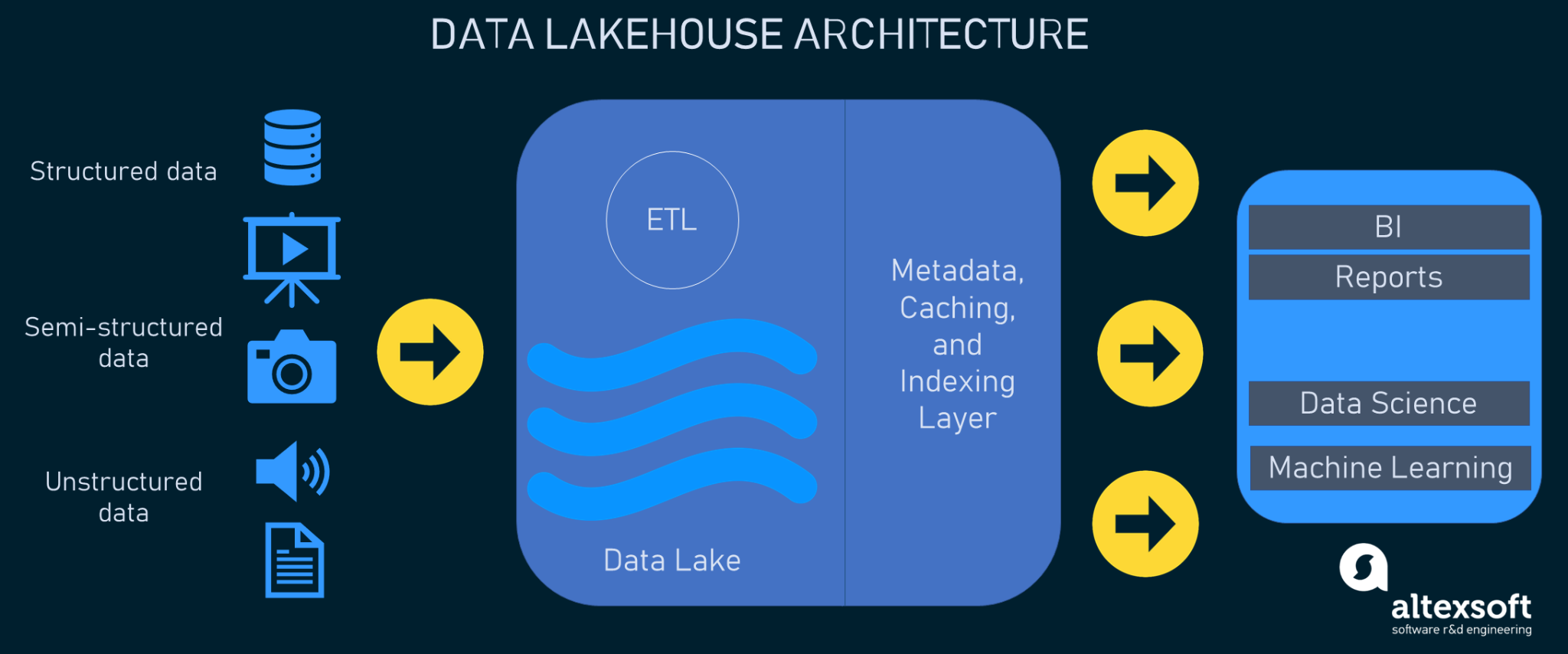

In a previous post, we talked briefly about using MongoDB and Databricks together. In this post, we'll cover the different ways to integrate these systems, and why.

Modern business demands expedited decision-making, highly personalized customer experiences, and increased productivity. Analytical solutions need to evolve constantly to meet these changing needs, but legacy systems struggle to consolidate the data necessary to serve them. They silo data across multiple databases and data warehouses. They also slow turnaround speeds due to high maintenance and scaling issues. This performance hit becomes a significant bottleneck as the data grows into terabytes and petabytes.

To overcome these challenges, enterprises need a solution that can easily handle high transaction volume, paired with a scalable data warehouse (increasingly known as a "lakehouse") that supports both traditional Business Intelligence (BI) and advanced analytics such as serving Machine Learning (ML) models. In our previous blog post, "Start your journey-operationalize AI enhanced real-time applications: mongodb-databricks", we discussed how MongoDB Atlas and the Databricks Lakehouse Platform can complement each other in this context. In this blog post, we will dive deep into the various ways to integrate MongoDB Atlas and Databricks for a complete solution to manage and analyze data to meet the needs of modern business.

Integration architecture

Databricks Delta Lake is a reliable and secure storage layer for storing structured and unstructured data that enables efficient batch and streaming operations in the Databricks Lakehouse. It is the foundation of a scalable lakehouse solution for complex analysis. Data from MongoDB Atlas can be moved to Delta Lake in batch or in real time and aggregated with historical data and other data sources to run long-running analytics and complex machine learning pipelines. The resulting insights can be moved back to MongoDB Atlas so they can reach the right audience at the right time to be actioned. There are several ways to move the data from MongoDB Atlas to Delta Lake.

If you have already created a role that Atlas is authorized to use for reading from and writing to your S3 bucket, select it. If you are authorizing Atlas for an existing role or are creating a new role, be sure to refer to the documentation for how to do this.
